This past week we had an intense educational experience here at the Alperovitch Institute: 5 hours of Malware Analysis and Reverse Engineering with Juan Andres Guerrero-Saade, every day, all week, including Saturday (Monday was a holiday). The class was a first in several ways: we had never taught malware analysis at SAIS Hopkins. It was our first professional skills class in this format. But the most stunning novelty was the use of ChatGPT in the classroom.

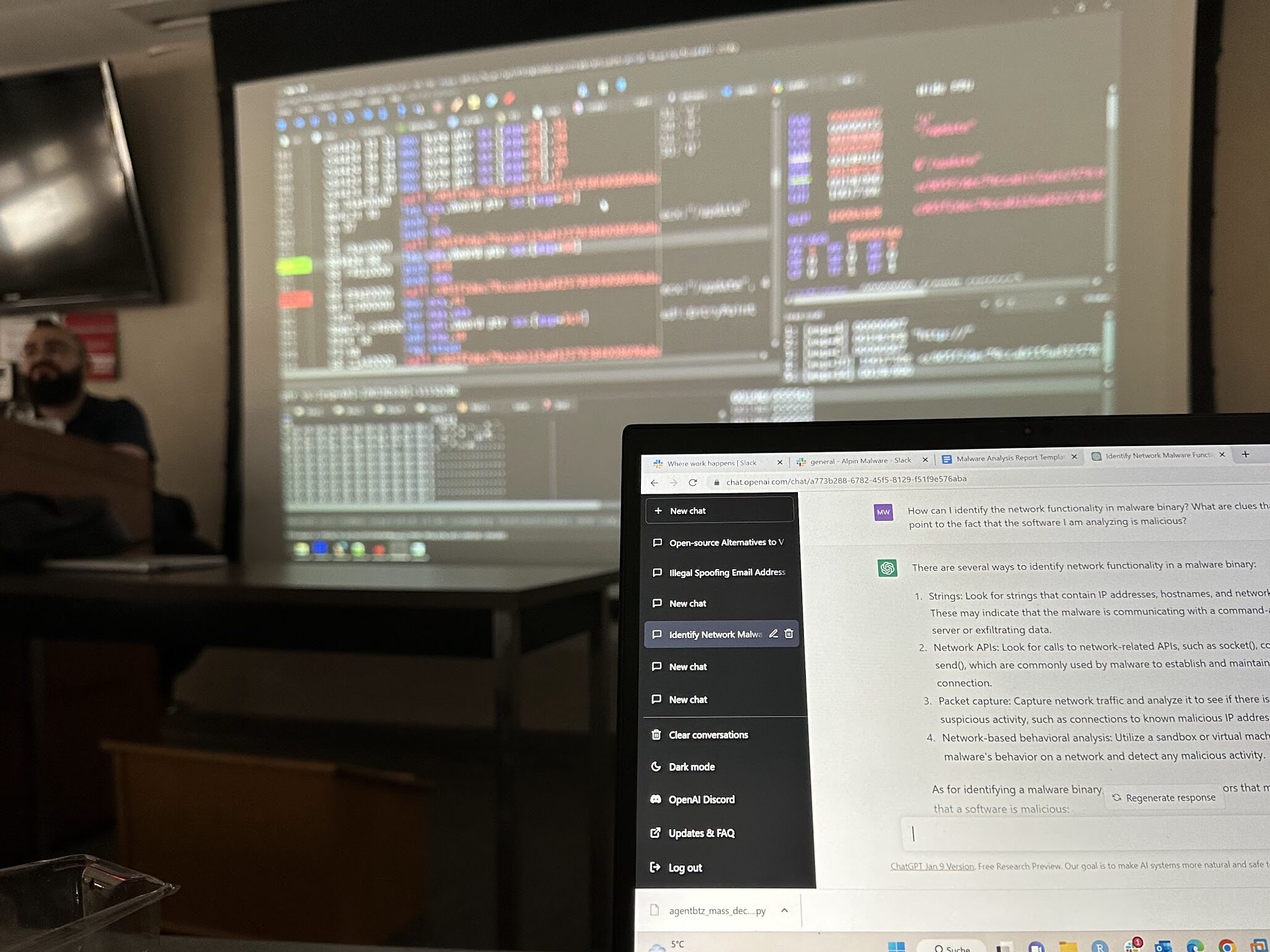

Juan Andres asked the students about a week ahead of time to register an account with OpenAI. Signups were waitlisted then, but most got an account in time. Then there we were, in our darkened classroom, code on screen, virtual machines running, ready to delve into core concepts of reverse engineering, into static and dynamic analysis, a bunch of new tools pre-loaded. A chat.openai.com/chat/ tab was open on most student machines at all times.

Five days later I no longer had any doubt: this thing will transform higher education. I was one of the students. And I was blown away by what machine learning was able to do for us, in real time. And I say this as somebody who had been a hardened skeptic of the artificial intelligence hype for many years. Note that I didn’t say “likely” transform. It will transform higher education. Here’s why.

The first use case is that the machine “filters mundane questions,” as one of our students put it quite eloquently. Meaning: you can ask the dumb questions to the AI instead of in class. Yes, there are dumb questions, or at least there are questions where the answer is completely obvious to anybody who knows even just a little bit about, say, malware analysis (or has done their assigned readings).

The second benefit follows: “you no longer disrupt the flow of the class,” as several students pointed out when we wrapped up — for example with a question like, “What’s an ‘offset’ in a binary file?” Or: “What is an embedded resource in malware?” You don’t want to interrupt the class — ask ChatGPT. Back in the day you had to Google for a few minutes at a minimum, jump hectically from result to result, wade through some forum, until you finally found a useful response; by then the class conversation had moved on. ChatGPT will give you the response in 5 to 15 seconds, literally. That response speed was game-changing last week, because we could keep up with the instructor in real time, reading ChatGPT’s explanation of embedded resources while listening to Juan Andres talking about the same thing.
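For readers who have the same question about offsets, here is a minimal sketch in Python. The sample bytes and the helper name `read_at` are made up for illustration; the only outside fact used is that Windows executables begin with the two ASCII bytes `MZ`.

```python
# An offset is a position in a file or buffer, counted in bytes from the start.
# The first byte sits at offset 0.

# Made-up sample bytes for illustration (not taken from any real sample).
data = bytes([0x4D, 0x5A, 0x90, 0x00, 0x03, 0x00, 0x00, 0x00])


def read_at(buf: bytes, offset: int, length: int) -> bytes:
    """Return `length` bytes starting `offset` bytes from the start of `buf`."""
    return buf[offset:offset + length]


# Offset 0x0, two bytes: the "MZ" magic that opens a Windows executable.
print(read_at(data, 0x0, 2))

# Offset 0x4, one byte: whatever happens to sit five bytes into the buffer.
print(read_at(data, 0x4, 1))
```

Tools such as hex editors and disassemblers show these same positions, usually in hexadecimal, in a column next to the bytes.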

Which in turn made the instructor more effective. Because you could compare the ChatGPT output with Juan Andres’ output in real time, next to each other, read and listen. He was our chief prompt engineer.

Another, related benefit really stunned me: everybody could keep up. We had a highly uneven level of technical expertise in the classroom, from no technical background to computer science degrees. In the before-time we would have lost at least half the class by day three. “I would have gotten lost in class several times without ChatGPT,” said Martin Wendiggensen, one of the more technical students. Same for me.

ChatGPT helped us navigate unfamiliar tools, such as IDA Pro or HIEW. What do the columns in IDA’s assembly view represent? How do I run a Python script in IDA? No longer obstacles.

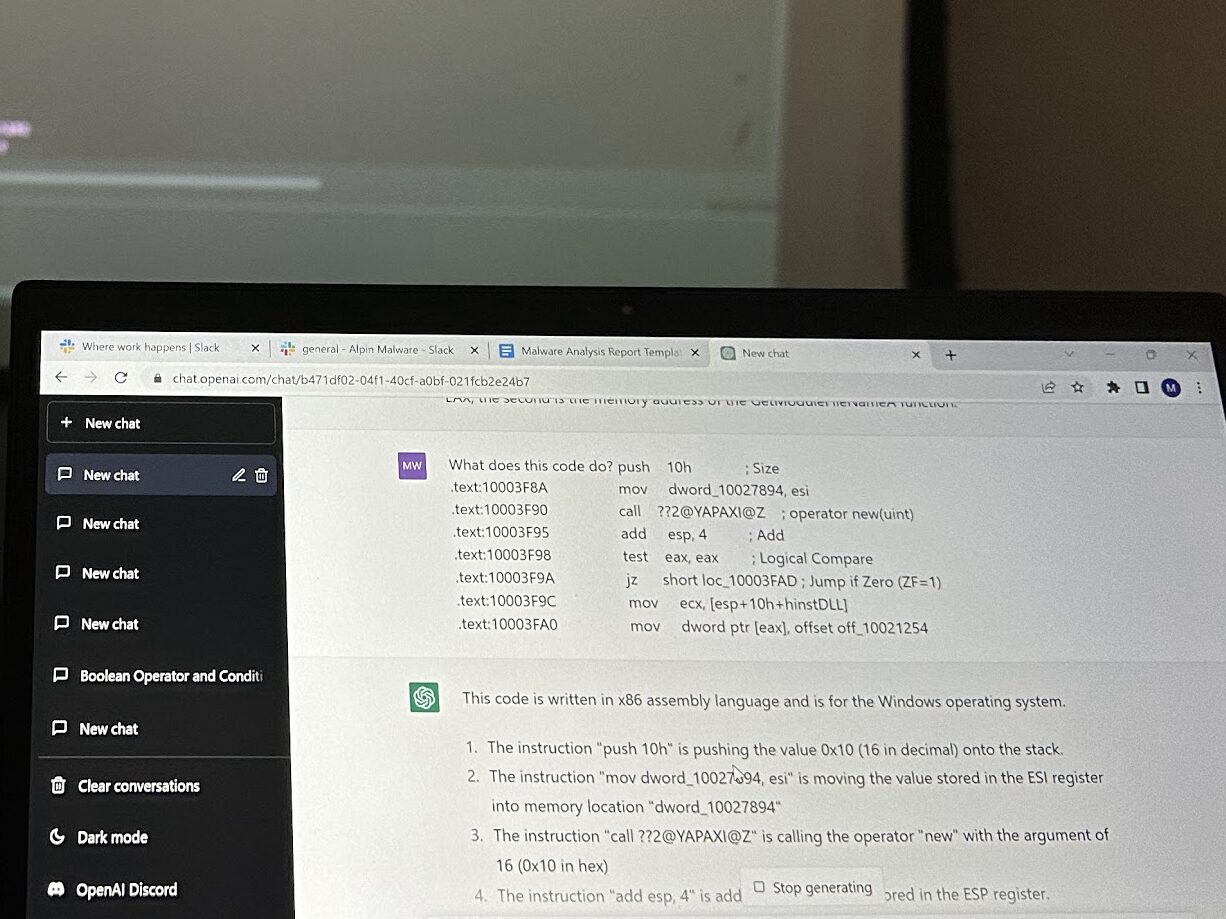

Then the bot helped the less technical students interpret code. We would just ask, “What does this do?” or some such question, followed by any copy-pasted computer code, and ChatGPT walked us through some Python script or assembly code snippet. “I was asking it assembly questions all week,” said Keanna Grelicha, one of our MASCI students.

It didn’t stop there. The machine even did some of the script writing for us. “I think the coding was huge,” said Lee Foster, one of our adjunct professors who took the class as well. There was one moment when I thought I was in a straight-up sci-fi movie: here was Juan Andres, code tattooed on his arms, dimly lit room, laptop covered in stickers, reverse engineering interfaces in dark mode up on the wall display, and that fast tap-tap sound of confident keyboard shortcuts as we were watching in silence how ChatGPT was writing a decryptor script in Python, Juan Andres copy-pasting the code over into a console, running it, throwing an error message back over to the AI with a terse “fix this” instruction, and then pulling the corrected code back over into the disassembler, where it would run smoothly, all in under a minute.
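The class script itself is not reproduced here, and nothing above says which cipher the sample used. For readers who have never seen a decryptor, here is a minimal sketch that assumes a repeating-key XOR, a scheme often used for simple string obfuscation. The function name `xor_decrypt`, the key, and the sample string are all invented for illustration.

```python
from itertools import cycle


def xor_decrypt(ciphertext: bytes, key: bytes) -> bytes:
    """XOR each ciphertext byte with the key, repeating the key as needed.

    Assumption: repeating-key XOR. The scheme used in class is not known.
    """
    if not key:
        raise ValueError("key must not be empty")
    return bytes(c ^ k for c, k in zip(ciphertext, cycle(key)))


# Hypothetical key and plaintext, chosen only to demonstrate the round trip.
key = b"\x5a\xa5"
plaintext = b"http://example.invalid/config"

# XOR is its own inverse, so the same function also "encrypts".
ciphertext = xor_decrypt(plaintext, key)
recovered = xor_decrypt(ciphertext, key)

print(recovered == plaintext)
```

In practice the key and the encrypted bytes would be pulled out of the binary at offsets found in the disassembler, which is the part an analyst still has to work out.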

“It would have been very difficult to teach a class like this without having this semi-smart interlocutor,” said Juan Andres. “I wish I could sit next to every one of you and answer all your questions, but I can’t.”

“The coolest questions for me were about conceptual relationships, you can ask ‘compare A with B,’ ‘what does A mean in the context of B,’” said Oskar Galeev, a PhD student and ferocious philosophy reader. I also found the prompts for conceptual difference highly productive. For instance: “What is the difference between an API and an Import?” in the context of that ongoing conversation thread on malware analysis that we kept going, the machine and I.

Of course we also saw ChatGPT’s limitations this week. Hiromitsu Higashi, a thoughtful student with an exceptionally broad range, pointed out that the system is good at some things but not as good at others: it created fake names in literature reviews, and has no concept of accuracy. “To scale it in the classroom we need to better understand its strengths and weaknesses,” said Hiromitsu. “Often the code was out of date,” added Martin, and pointed out that the language model had its knowledge cutoff in 2021, so about two years ago. Code had often evolved since. Don’t ask it to explain cryptonyms. Don’t trust book recommendations. It will hallucinate. It will make mistakes. It will perform more poorly the closer you move to the edge of human knowledge. It appears to be weak on some technical questions. Some of these limitations will be overcome by the next versions, others will not.

Last week brought two related features of artificial intelligence in education into sharp relief. The first is that all that talk about plagiarism and cheating and abuse is uninspiring and counterproductive. Yes, some unambitious students will use this new tool to cover subpar performance, and yes, we could talk about how to detect or disincentivize such behavior. The second is that the far more inspiring conversation is a different one: how can the most creative, the most ambitious, and the most brilliant students achieve even better results faster? How can educators help them along the way? And how can we both use machines that learn, and help learn, to push out the edge of human knowledge through cutting-edge research faster and in new ways?

Saturday evening it felt like we had a new superpower.