Last week, we conducted an experiment here at the Institute: teaching an intensive primer on Malware Analysis for non-technical students. Unlike beginner Malware Analysis courses that give a light smattering of approachable tools and concepts, we’d walk through the analysis of a single sample end-to-end, from complete unknown to deep understanding.

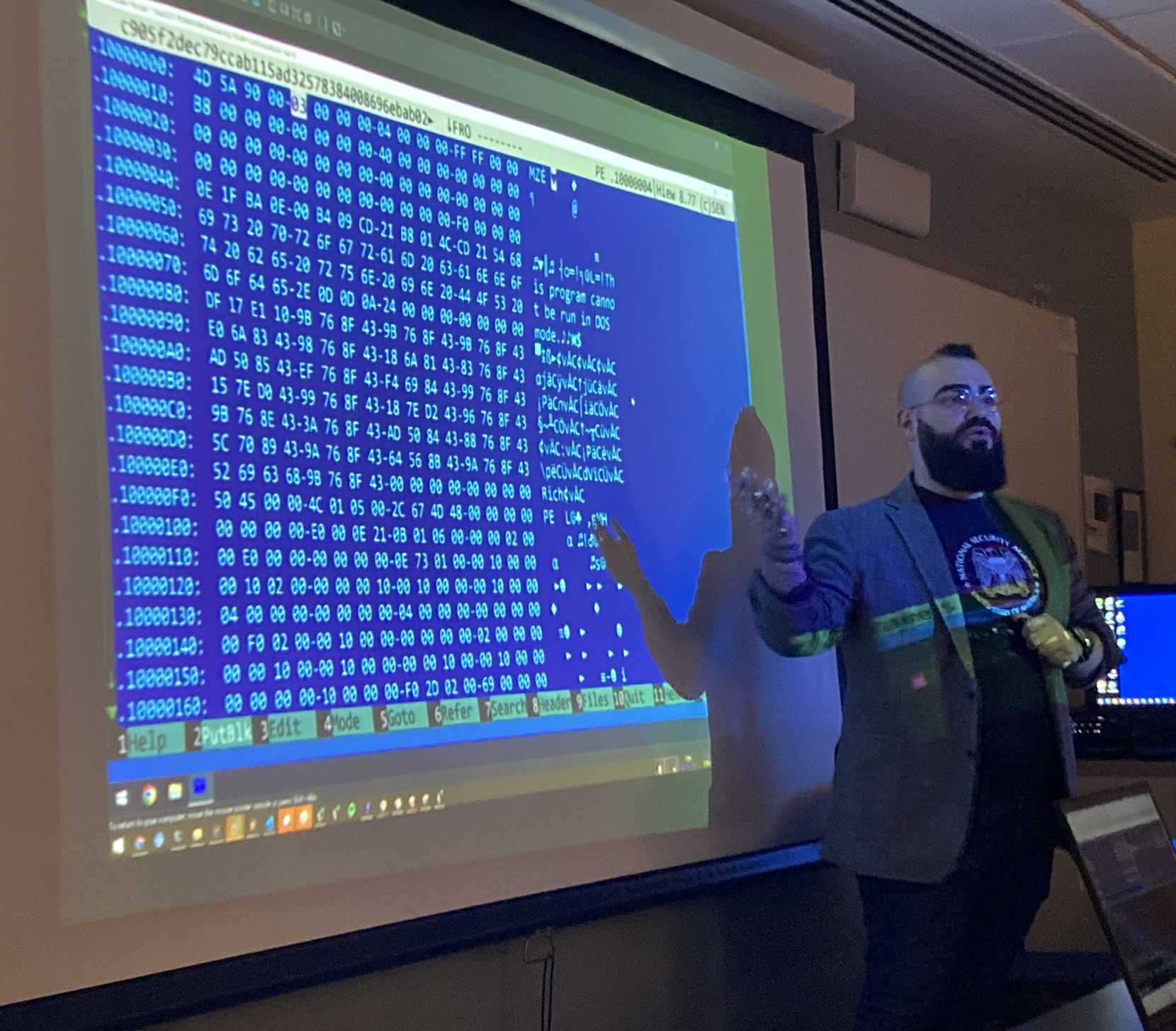

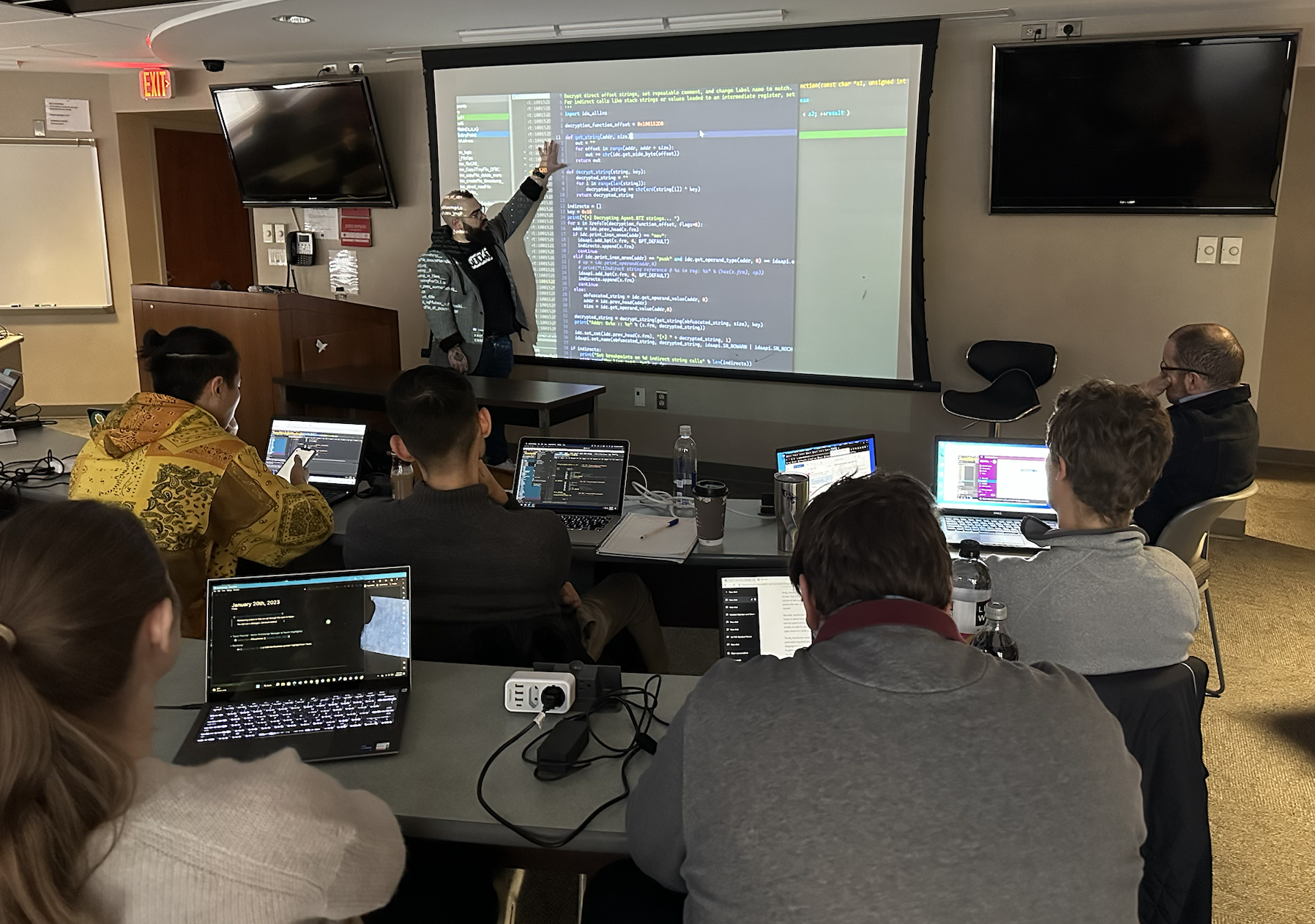

To keep myself intellectually honest, we picked a malware sample I had never analyzed before: an Agent.BTZ sample. We started with the initial triage, taking an unknown executable and collecting context about what kind of file it is, then doing light static analysis with the old-school but highly effective tool Hacker’s View (HIEW). We then proceeded into deeper static analysis of the same sample with IDA Pro, going so far as to write standalone string decrypters that we then converted into more comprehensive IDAPython scripts. Equipped with that knowledge, we engaged in dynamic analysis with x64dbg and finally coalesced all of our resulting insights into a sample Malware Analysis report.
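For readers who have never seen one, a standalone string decrypter can be very small. The sketch below is not the routine from the course or from the sample itself; it assumes a simple repeating-key XOR scheme, and the key, the encoded bytes, and the function names are all hypothetical placeholders chosen for illustration.

```python
from itertools import cycle


def xor_decrypt(data: bytes, key: bytes) -> bytes:
    """XOR every byte of data against a repeating key (assumed scheme)."""
    if not key:
        raise ValueError("key must not be empty")
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


def decode_c_string(data: bytes, key: bytes) -> str:
    """Decrypt a blob and return the text up to the first NUL terminator."""
    plain = xor_decrypt(data, key)
    return plain.split(b"\x00", 1)[0].decode("ascii", errors="replace")


if __name__ == "__main__":
    # Hypothetical key and blob; a real sample would supply its own.
    key = b"\x5a\xa5"
    # XOR is symmetric, so the same function builds the demo ciphertext.
    blob = xor_decrypt(b"kernel32.dll\x00", key)
    print(decode_c_string(blob, key))
```

Once a routine like this works on bytes copied out of the binary by hand, the same logic can be moved into an IDAPython script that reads the encoded bytes straight from the database and annotates each string in place.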

We asked students to do an inordinate amount of preparation for a weeklong course: reading a minimum of 14 chapters of Sikorski’s Practical Malware Analysis book, plus a list of quick-start references. Surprisingly, a majority of them did, making it possible to move more quickly. Despite the prep, there’s one seemingly insurmountable aspect of teaching this subject to students of varying familiarity: every student was some combination of ‘don’t know assembly’, ‘don’t know how to code’, ‘not familiar with programming concepts’, ‘hadn’t used any of these tools’, etc.

That’s where OpenAI’s ChatGPT stepped in as a teaching assistant able to sit next to each student and answer all the ‘stupid’ questions that would otherwise derail the larger course. It was a first-attempt ‘TA’ that helped students refine their questions into more meaningful ones.

Was it ever wrong? Absolutely! And it was amazing to see students recognize that, refine their prompts, ask it and me better questions, and feel empowered to approach a difficult side-topic by having ChatGPT write a Python script or tell them how to run it, and then move on.

Fearmongering around AI (or outsized expectations of perfect outputs) clouds the recognition of this LLM’s staggering utility: as an assistant able to quickly coalesce information (right or wrong) with extreme relevance for a more discerning intelligence (the user) to work with.

Thankfully, in a professional development course, there’s little room for performative concerns like plagiarism. You’re welcome to rob yourself, but the point here is to learn how something is done and to have a path forward into the largely esoteric practice of reverse engineering malware.

I am staggered by the sincere engagement of our students. Even after 5-6 hours of instruction per day, I’d receive 11 PM messages telling me they had deobfuscated a new string in the binary and wanted to understand how it might be used. They pushed themselves way past their comfort zone and in turn pushed me to produce more material on the fly to enable them further.

In the end, we went from some vague executable blob to recognizing how an old Agent.BTZ sample went about infecting the USB drives of U.S. military servicemen, deobfuscating hidden strings, resolving APIs, establishing persistence, and calling out to a satellite hop point to reach a hidden command-and-control server.

This was a purely experimental endeavor in the hope of bolstering meaningful cybersecurity education. Some students may choose to engage further with malware analysis. Many more will hopefully enter the larger policy discussions around this subject with a rare first-hand grasp of it and give the discourse a much-needed boost in accuracy.

I’d be remiss not to thank Hex-Rays for educational access to IDA Pro, Stairwell for access to their Inception platform for all students, and OpenAI for inadvertently superpowering our educational experiment.